CVPR2024: 5th Workshop on Robot Visual Perception in Human Crowded Environments.

The JackRabbot Open-World Panoptic Segmentation & Tracking Dataset and Benchmark

Our workshop took place on June 18th, 2024, from 1:30 PM to 5:30 PM in room Arch 210. It was a hybrid workshop. Check out our recording.

Welcome to the 5th workshop in the JRDB series! Our workshops are designed to tackle perceptual problems that arise when autonomous robots operate and interact in human environments, including human detection, tracking, forecasting, and body pose estimation, as well as social grouping and activity recognition.

In this workshop, we are excited to explore the challenging task of Open-World Panoptic Segmentation and Tracking in human-centred environments. We will introduce a new competition that challenges participants to develop models capable of accurately predicting panoptic segmentation and tracking results using the JRDB dataset. We have also invited speakers from the fields of visual perception and robotics to offer valuable insights into understanding human-centred scenes.

We invite researchers to submit papers addressing topics related to autonomous robots in human environments. Relevant topics include, but are not limited to:

Full papers are up to eight pages, including figures and tables, in the CVPR style. Additional pages containing only cited references are allowed. For more information, please refer to the guidelines provided by CVPR here. Extended abstracts should also adhere to the CVPR style and are restricted to a maximum of one page, excluding references. Accepted papers have the opportunity to be presented as posters during the workshop. However, only full papers will appear in the proceedings.

By submitting to this workshop, the authors agree to the review process and understand that we will do our best to match papers to the best possible reviewers. The reviewing process is double-blind. Submission to the challenge is independent of the paper submission, but we encourage authors to submit to one of the challenges.

Submissions website: here. If you have any questions about submitting, please contact us here.

HumanFormer: Human-centric Prompting Multi-modal Perception Transformer for Referring Crowd Detection

Heqian Qiu, Lanxiao Wang, Taijin Zhao, Fanman Meng, Hongliang Li

InViG: Benchmarking Open-Ended Interactive Visual Grounding with 500K Dialogues

Hanbo Zhang, Jie Xu, Yuchen Mo, Tao Kong

Must Unsupervised Continual Learning Relies on Previous Information?

Haoyang Cheng, Haitao Wen, Heqian Qiu, Lanxiao Wang, Minjian Zhang, Hongliang Li

GM-DETR: Generalized Multispectral DEtection TRansformer with Efficient Fusion Encoder for Visible-Infrared Detection

Yiming Xiao, Fanman Meng, Qingbo Wu, Linfeng Xu, Mingzhou He, Hongliang Li

Pre-trained Bidirectional Dynamic Memory Network For Long Video Question Answering

Jinmeng Wu, Pengcheng Shu, HanYu Hong, Ma Lei, Ying Zhu, Wang Lei

DSTCFuse: A Method based on Dual-cycled Cross-awareness of Structure Tensor for Semantic Segmentation via Infrared and Visible Image Fusion

Xuan Li, Rongfu Chen, Jie Wang, Ma Lei, Li Cheng

Is Our Continual Learner Reliable? Investigating Its Decision Attribution Stability through SHAP Value Consistency

Yusong Cai, Shimou Ling, Liang Zhang, Lili Pan, Hongliang Li

The challenge includes:

Each challenge submission should be followed by an extended abstract or full paper submission via our paper submission portal (see details above) or a link to an existing preprint/publication. The top three submissions will have the opportunity to present their work as a 5-minute spotlight at the workshop.

Submissions will be evaluated based on:

All methods are evaluated on stitched images from the dataset at 1 Hz (every 15th image). Participants can also use individual camera views for training, supported by the provided camera calibration parameters and stitching code. For more details on the dataset, visit the JRDB-PanoTrack dataset.
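As a rough illustration of the 1 Hz sampling described above, the sketch below picks every 15th frame index from a sequence. It is not the official evaluation code: the function name `evaluation_frame_indices`, the assumption that sampling starts at frame 0, and the 60-frame example sequence are all hypothetical.

```python
def evaluation_frame_indices(num_frames: int, stride: int = 15, offset: int = 0) -> list[int]:
    """Return the indices kept when sampling every `stride`-th frame.

    Assumption: sampling starts at `offset` (0 by default); the actual
    benchmark may use a different starting frame.
    """
    if num_frames < 0 or stride <= 0 or offset < 0:
        raise ValueError("num_frames and offset must be >= 0, stride must be > 0")
    return list(range(offset, num_frames, stride))


if __name__ == "__main__":
    # Hypothetical 60-frame sequence; with a stride of 15 this keeps 4 frames.
    print(evaluation_frame_indices(60))  # [0, 15, 30, 45]
```

The `offset` parameter is included only so the sketch can be adapted if the evaluated frames turn out not to start at index 0.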

The best submission from each account will be displayed automatically during the challenge period, encouraging competition and showcasing leading-edge methods.

| PST Time | Speakers | Topic |
|---|---|---|
| 1:30 - 1:40 | Organisers | Introduction |
| 1:40 - 2:10 | Laura Leal-Taixe | Towards segmenting anything in Lidar |
| 2:10 - 2:30 | Organisers | JRDB dataset, annotations, and challenges |
| 2:30 - 3:15 | Break - Poster session | |
| 3:15 - 3:45 | Deva Ramanan | Self-supervised learning of dynamic scenes from moving cameras |
| 3:45 - 4:15 | Rita Cucchiara | Embodied Navigation by visual and Language interaction with objects and people |
| 4:15 - 4:45 | Bolei Zhou | UrbanSim: Generating and Simulating Diverse Urban Spaces for Embodied AI Research |
| 4:45 - 5:15 | Michael Milford | Introspection, localization, and similar scene inference for autonomous systems |
| 5:15 - 5:30 | Organisers | Conclusion |

Full Professor at University of Modena and Reggio Emilia, Italy

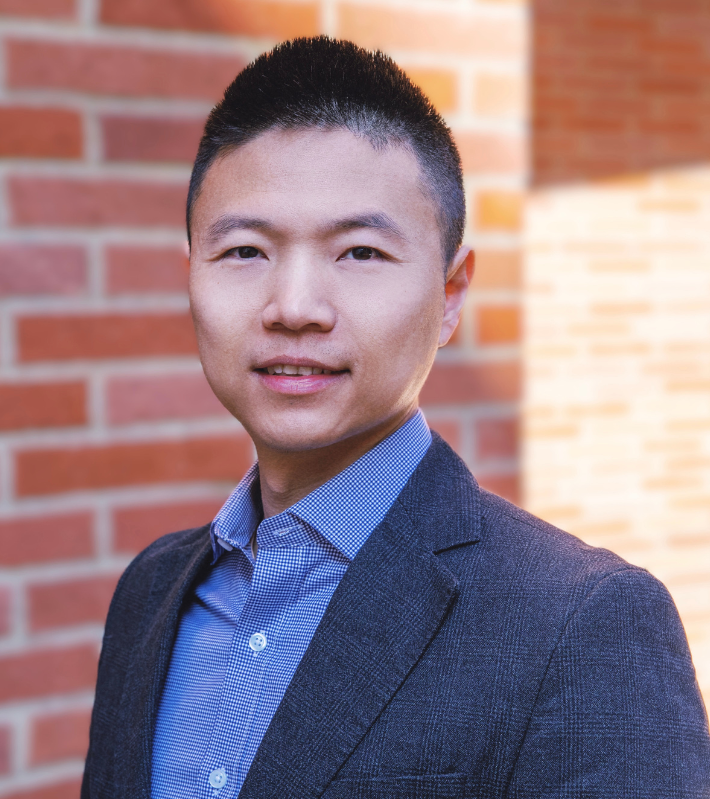

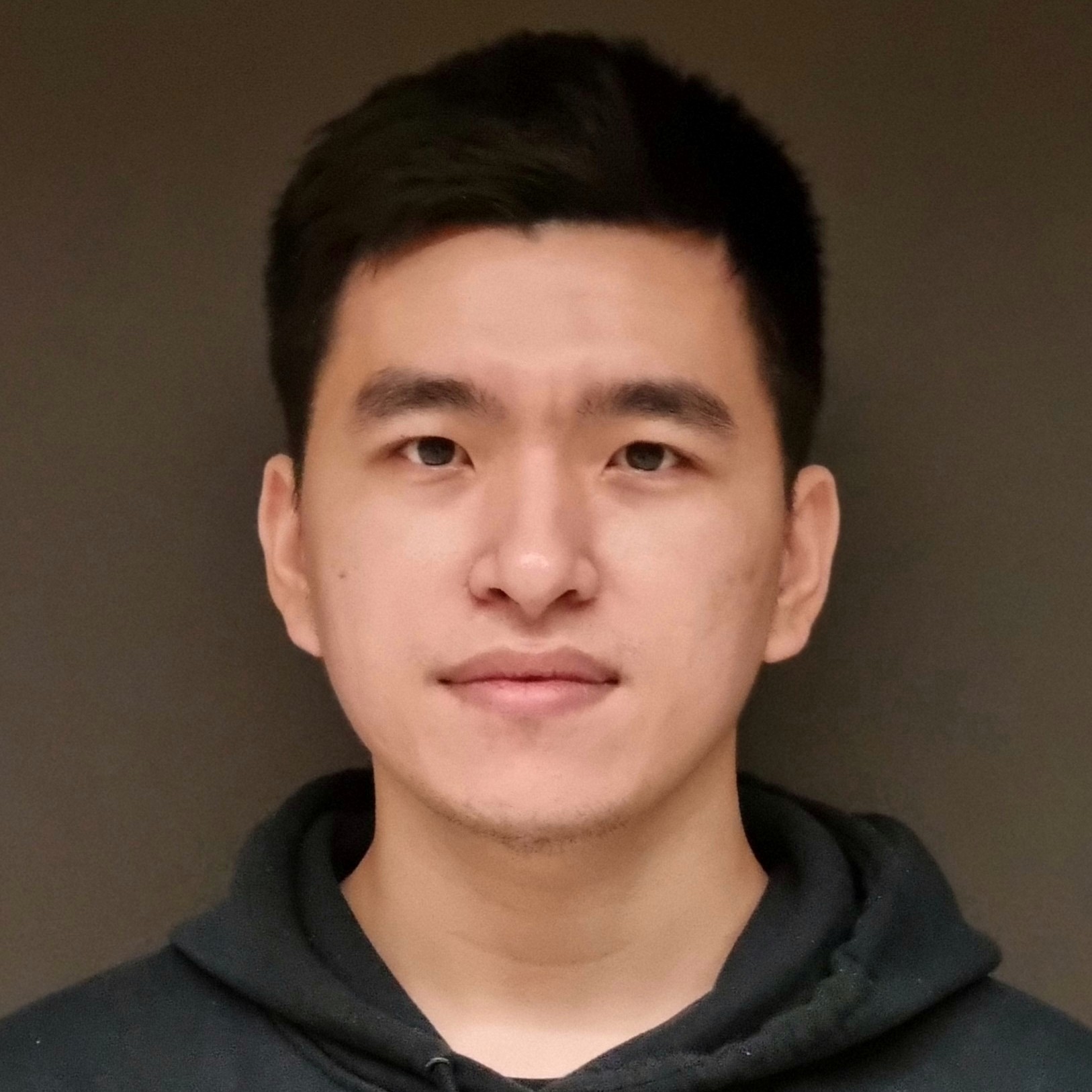

NVIDIA | Adjunct Professor at Technical University of Munich

Professor at Carnegie Mellon University, USA

Assistant Professor at University of California, Los Angeles, USA

Professor at Queensland University of Technology, Australia

| Name | Organization |
|---|---|
| Apoorv Singh | Motional |
| Gengze Zhou | The University of Adelaide |
| Haodong Hong | The University of Queensland |
| Houzhang Fang | Xidian University |
| Jiarong Guo | Hong Kong University of Science and Technology |
| Jin Li | Shaanxi Normal University |
| Michael Wray | University of Bristol |
| Mingtao Feng | Xidian University |
| Qi Wu | Shanghai Jiao Tong University |
| Shuai Guo | Shanghai Jiao Tong University |
| Shun Taguchi | Toyota Central R&D Labs., Inc. |
| Tiago Rodrigues de Almeida | Örebro University |
| Weijia Liu | Southeast University |
| Zijie Wu | University of Western Australia |
| Ziyu Ma | Hunan University |

Monash University

EPFL

The University of Adelaide

Monash University

Monash University

Monash University